Publications

2021

2019

The combination of deep learning with reinforcement learning, and the application of deep learning to the sciences, are relatively new and flourishing fields. By designing an algorithm that learns to solve seven different combinatorial puzzles, including the Rubik's Cube, we show how deep reinforcement learning techniques can learn to solve problems, often in the most efficient way possible, when faced with many possibilities but little information. Furthermore, we show how deep learning can be applied to the field of circadian rhythms, which are fundamental to all forms of life. Using deep learning, we can gain insight into circadian rhythms at the molecular level. Finally, we propose new deep learning algorithms that yield significant performance improvements on computer vision and high energy physics tasks.

2018

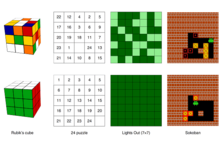

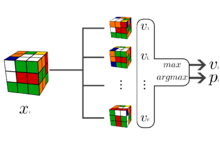

Recently, Approximate Policy Iteration (API) algorithms have achieved super-human proficiency in two-player zero-sum games such as Go, Chess, and Shogi without human data. These API algorithms iterate between two policies: a slow policy (tree search) and a fast policy (a neural network). In these two-player games, a reward is always received at the end of the game. However, the Rubik's Cube has only a single solved state, and episodes are not guaranteed to terminate. This poses a major problem for these API algorithms, since they rely on the reward received at the end of the game. We introduce Autodidactic Iteration: an API algorithm that overcomes the problem of sparse rewards by training on a distribution of states that allows the reward to propagate from the goal state to states farther away. Autodidactic Iteration is able to learn how to solve the Rubik's Cube and the 15-puzzle without relying on human data. Our algorithm solves 100% of randomly scrambled cubes while achieving a median solve length of 30 moves, which is less than or equal to that of solvers that employ human domain knowledge.
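To make the idea of reward propagating outward from the goal concrete, here is a minimal tabular sketch, not the authors' implementation. Everything in it is an assumption for illustration: a toy 4-tile puzzle with adjacent swaps stands in for the Rubik's Cube, a Python dict stands in for the neural network, the tree search (slow policy) is omitted, the reward is +1 for reaching the solved state and -1 otherwise, and every scramble up to a fixed depth is enumerated instead of sampled so the example is deterministic. All function names are hypothetical.

```python
import itertools

# Hypothetical toy puzzle standing in for the Rubik's Cube:
# a state is a tuple of 4 tiles; each move swaps two adjacent positions.
GOAL = (0, 1, 2, 3)
MOVES = [(0, 1), (1, 2), (2, 3)]


def apply_move(state, move):
    """Return the state reached by swapping the two positions in `move`."""
    i, j = move
    s = list(state)
    s[i], s[j] = s[j], s[i]
    return tuple(s)


def reward(state):
    """Assumed reward: +1 for the single solved state, -1 for any other state."""
    return 1.0 if state == GOAL else -1.0


def scrambled_states(max_depth):
    """Every state reached by scrambling the goal with 1..max_depth moves.

    Random scrambles are replaced by full enumeration so the toy run is
    deterministic.
    """
    states = set()
    for depth in range(1, max_depth + 1):
        for seq in itertools.product(MOVES, repeat=depth):
            s = GOAL
            for m in seq:
                s = apply_move(s, m)
            states.add(s)
    states.discard(GOAL)  # the goal's value stays fixed at 0
    return states


def lookahead(state, value):
    """One-step lookahead: R(child) + v(child) for every move."""
    scores = []
    for m in MOVES:
        child = apply_move(state, m)
        scores.append(reward(child) + value.get(child, 0.0))
    return scores


def autodidactic_iteration(max_depth=6, iterations=10):
    """Tabular sketch: a dict plays the role of the value network."""
    value = {GOAL: 0.0}
    train = scrambled_states(max_depth)
    for _ in range(iterations):
        # Compute every target from the current values, then "fit" them all.
        targets = {s: max(lookahead(s, value)) for s in train}
        value.update(targets)
    return value


def solve(state, value, max_steps=20):
    """Greedily take the move with the highest lookahead score."""
    path = []
    while state != GOAL and len(path) < max_steps:
        scores = lookahead(state, value)
        best = max(range(len(MOVES)), key=scores.__getitem__)
        state = apply_move(state, MOVES[best])
        path.append(MOVES[best])
    return path
```

Under these assumptions, each iteration extends the correct values one move farther from the solved state. After enough iterations, a state d moves from the goal has value 2 - d, so a greedy walk on the learned values, for example `solve((3, 2, 1, 0), autodidactic_iteration())`, reaches the goal in the minimum number of moves.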